Finding the right words

Context

Finding the right words is a hard problem for large language models (LLMs). In this post, we review the fundamental pieces that help LLMs choose appropriate words during text generation.

Autoregressive LLMs generate text one token at a time. At each step, the model predicts a probability distribution over its entire vocabulary, which can contain tens of thousands of tokens. The generation process then needs to select the next token based on this distribution.

Simply choosing the highest-probability token often leads to repetitive text. Therefore, various decoding strategies are employed to balance coherence, diversity, and task-appropriateness in the generated text.

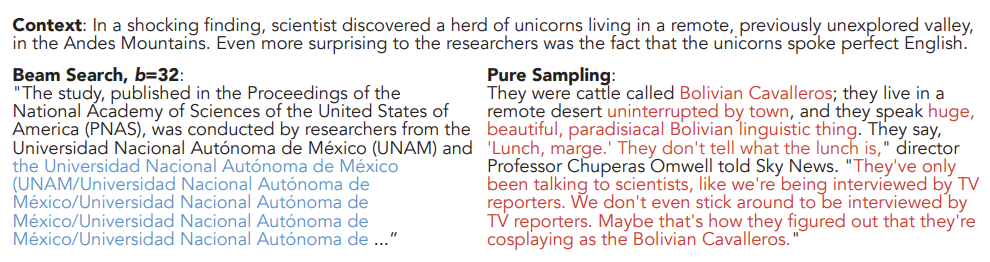

Here’s an image from Holtzman et al. (2020) to illustrate the problem:

As you can see, these decoding strategies significantly influence the quality and relevance of the output, making them crucial for optimizing LLM performance across different applications. We’ll explore popular techniques like temperature scaling, top-k sampling, and top-p (nucleus) sampling, discussing their mechanisms and impacts.

Preliminaries

Logits

A logit is a number that tells you how likely something is to happen versus not. Formally, it is the log-odds of a probability $p$: $\text{logit}(p) = \log\frac{p}{1-p}$. Think of logits as a way to stretch out probabilities, making very likely and very unlikely events easier to distinguish.

- Logits map probabilities in $(0, 1)$ to the whole real line $(-\infty, \infty)$

- Logit = 0 means a 50-50 chance of something happening, i.e. $p = 0.5$.

- A negative logit means $p < 0.5$

- A positive logit means $p > 0.5$
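The log-odds reading of a logit can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names `logit` and `sigmoid` are chosen here for illustration.

```python
import math

def logit(p: float) -> float:
    """Log-odds of a probability p in the open interval (0, 1)."""
    return math.log(p / (1.0 - p))

def sigmoid(x: float) -> float:
    """Inverse of logit: maps any real number back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Probabilities close to 0 or 1 are stretched far apart on the logit scale.
for p in (0.01, 0.5, 0.99):
    print(f"p={p:<5} logit={logit(p):+.3f}")
```

Note that the per-token logits an LLM emits are usually unnormalized scores that a softmax turns into probabilities, so the 50-50 reading applies most directly to the two-outcome case.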

Why do we use logits?

- Logits cover a wider numerical range without loss of precision

- Logits simplify the optimization process

- Logits help with training the model effectively using techniques like Gradient Descent

An autoregressive LLM outputs one logit per vocabulary token at each time step. Sampling parameters determine how to pick the next word from these logits.

Sampling Words

top-k

top-k is a hard filter. Here I decide “I want only the top k tokens to be considered in each step” before I do anything else. These top-k tokens are selected by sorting the logit outputs. The value of k is static: it stays the same at every time step.

The presence of flat output distributions makes the use of a small k in top-k sampling problematic, while the presence of peaked distributions makes large k’s problematic [1].
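A top-k filter can be sketched in a few lines. This version is an illustration under one assumption: discarded tokens are marked with a logit of negative infinity, so that a later softmax assigns them zero probability. The name `top_k_filter` is hypothetical.

```python
import math

def top_k_filter(logits: list[float], k: int) -> list[float]:
    """Keep the k largest logits; set the rest to -inf."""
    if k <= 0 or k >= len(logits):
        return list(logits)  # nothing to filter
    # Indices sorted by logit, largest first (ties keep their original order).
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    keep = set(order[:k])
    return [x if i in keep else -math.inf for i, x in enumerate(logits)]

logits = [2.0, 1.0, 0.5, -1.0, -3.0]
print(top_k_filter(logits, k=2))
```

Because k is fixed, the same number of tokens survives whether the distribution is flat or peaked, which is the weakness described above.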

Temperature

After the top-k step, we apply temperature scaling. The term temperature is borrowed from statistical physics and has nothing to do with language. The analogy is that temperature controls the “energy” or “uncertainty” of the system represented by a probability distribution.

In our problem of finding words, remember the logit output in each step? We now divide each logit value by a temperature $T$, ranging from 0 to $\infty$.

- $T$ = 1 means the system is unaffected. Everything remains the same.

- A high $T$ brings logits closer to 0. Remember what a logit of 0 meant? Everything is more equally likely now, which means I can say (or sample from) many, many things.

- A low $T$ shifts logits further from 0. Now everything is more opinionated as the logits are further exaggerated, which means I will now pick from the most opinionated words.
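The effect of temperature is easiest to see by scaling a small set of logits and converting them to probabilities. The sketch below uses only the standard library; `apply_temperature` and `softmax` are illustrative names, and rejecting a temperature of exactly 0 is a choice made here (implementations often treat that case as greedy selection instead).

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Convert logits to probabilities, subtracting the max for stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def apply_temperature(logits: list[float], temperature: float) -> list[float]:
    """Divide every logit by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch")
    return [x / temperature for x in logits]

logits = [2.0, 1.0, 0.0]
for t in (0.5, 1.0, 2.0):
    probs = softmax(apply_temperature(logits, t))
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

Running this shows the top token taking a larger share of the probability as the temperature drops, and the distribution flattening as it rises.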

Luke Salamone’s blog has a great guide to play with temperature values to get an intuitive sense of what it does [2].

top-p

top-p sampling, also known as nucleus sampling, selects the smallest set of tokens whose cumulative probability mass reaches a predefined threshold p.

This method dynamically adjusts the number of tokens considered for sampling based on their cumulative probability until the probability mass reaches p.

Holtzman et al. found that nucleus sampling obtains the closest perplexity to human text [1].

To apply top-p, we first need to go from logits to probabilities. We apply the softmax operation to the logit values that remain after the top-k filtering and temperature scaling steps.

Note that whatever probability mass was lost in the top-k step is now redistributed among the remaining tokens.
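The redistribution can be seen by comparing a softmax over all logits with a softmax over logits where the filtered-out tokens are masked. This sketch assumes the top-k step marks removed tokens with negative infinity; the values are made up for illustration.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Softmax that tolerates -inf entries (they get probability 0.0)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # math.exp(-inf) is 0.0
    total = sum(exps)
    return [e / total for e in exps]

full = [2.0, 1.0, 0.5, -1.0]
masked = [2.0, 1.0, -math.inf, -math.inf]  # as left by a top-k filter, k=2

print([round(p, 3) for p in softmax(full)])
print([round(p, 3) for p in softmax(masked)])
```

The two surviving tokens end up with higher probabilities than before, and the masked ones drop to exactly zero.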

Finally, we sort the probability values, cumulatively add them from top to bottom, and stop when the running total reaches the top-p threshold (say, 0.9).

This is now my final set of candidate words, from which I sample the next one to continue forming my sentence.
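The sort, accumulate, and stop procedure can be sketched as follows. The function names are hypothetical, the example probabilities are invented, and renormalizing the nucleus before sampling is a common choice assumed here rather than something the text above spells out.

```python
import random

def top_p_filter(probs: list[float], p: float) -> dict[int, float]:
    """Return {token_index: renormalized probability} for the nucleus."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, cumulative = [], 0.0
    for i in order:
        nucleus.append(i)
        cumulative += probs[i]
        if cumulative >= p:  # stop once the mass reaches the threshold
            break
    total = sum(probs[i] for i in nucleus)
    return {i: probs[i] / total for i in nucleus}

def sample(nucleus: dict[int, float], rng: random.Random) -> int:
    """Draw one token index according to the nucleus probabilities."""
    tokens = list(nucleus)
    weights = [nucleus[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

probs = [0.45, 0.30, 0.20, 0.03, 0.02]
nucleus = top_p_filter(probs, p=0.9)
print(sorted(nucleus))                      # indices kept in the nucleus
print(sample(nucleus, random.Random(0)))    # one sampled token index
```

With a peaked distribution the nucleus shrinks to a handful of tokens, while a flat distribution lets many more through, which is the dynamic behavior that top-k lacks.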

Repetition Penalty

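Keskar et al. introduce another form of penalty called repetition penalty [3]. Given logits $x_i$ and temperature $T$, the probability of the next token is

$$p_i = \frac{\exp\left(x_i / (T \cdot I(i \in g))\right)}{\sum_j \exp\left(x_j / (T \cdot I(j \in g))\right)}, \qquad I(c) = \begin{cases} \theta & \text{if } c \text{ is true} \\ 1 & \text{otherwise} \end{cases}$$

where $g$ is the list of already generated tokens. They found that using greedy sampling and $\theta \approx 1.2$ yields a good balance between truthful generation and lack of repetition. In passing, they note that this approach succeeds only if the model has learned a sufficiently reliable distribution.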
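A repetition penalty of this kind can be sketched by discounting the logits of tokens that were already generated and then picking greedily. The code below is an illustration, not the CTRL implementation: the parameter name `theta` follows the paper's symbol, temperature is left at 1, and the sign handling is an assumption added here. A literal division by the penalty would raise a negative logit, so this sketch multiplies negative logits instead, as some implementations do.

```python
def apply_repetition_penalty(
    logits: list[float], generated: list[int], theta: float = 1.2
) -> list[float]:
    """Discount the logits of tokens that appear in `generated`."""
    out = list(logits)
    for i in set(generated):
        # Positive logits shrink toward 0; negative logits move further down.
        out[i] = out[i] / theta if out[i] > 0 else out[i] * theta
    return out

def greedy(logits: list[float]) -> int:
    """Index of the largest logit."""
    return max(range(len(logits)), key=lambda i: logits[i])

logits = [3.0, 2.9, -1.0]
generated = [0, 2]  # token indices produced so far
penalized = apply_repetition_penalty(logits, generated)
print(greedy(logits), greedy(penalized))
```

In this toy case the penalty is just enough to make greedy selection switch from the already used token 0 to the unused token 1.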

Frequency & Presence Penalties

The ChatGPT API has similar frequency and presence penalties to suppress repetition.

The presence penalty is a one-off additive contribution that applies to all tokens that have been sampled at least once, and the frequency penalty is a contribution proportional to how often a particular token has already been sampled.

The original logit mu[j] for the j’th token is replaced by the following value (logits are shifted to the left, i.e. lowered):

    mu[j] - c[j] * alpha_frequency - float(c[j] > 0) * alpha_presence

where:

- c[j] is how often that token was sampled prior to the current position

- float(c[j] > 0) is 1 if c[j] > 0 and 0 otherwise (indicator function)

- alpha_frequency scales the frequency penalty, which is proportional to how often a particular token has already been sampled

- alpha_presence scales the presence penalty, a one-off additive contribution that applies to all tokens that have been sampled at least once
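The logit adjustment can be written out directly in Python. This is a minimal sketch that reuses the names mu, c, alpha_frequency and alpha_presence from the expression above; the function name and the example values are invented for illustration, and it is not the API's actual implementation.

```python
from collections import Counter

def apply_frequency_presence_penalties(
    mu: list[float],
    generated: list[int],
    alpha_frequency: float = 0.0,
    alpha_presence: float = 0.0,
) -> list[float]:
    """Lower the logit of every token that was already sampled."""
    c = Counter(generated)  # c[j]: how often token j was sampled so far
    return [
        mu_j - c[j] * alpha_frequency - float(c[j] > 0) * alpha_presence
        for j, mu_j in enumerate(mu)
    ]

mu = [2.0, 1.0, 0.5]
generated = [0, 0, 1]  # token 0 sampled twice, token 1 once, token 2 never
print(apply_frequency_presence_penalties(
    mu, generated, alpha_frequency=0.5, alpha_presence=0.25
))  # [0.75, 0.25, 0.5]
```

Token 0 pays the frequency penalty twice plus the presence penalty once, token 1 pays each once, and token 2 is untouched.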

ChatGPT’s API docs provide some usage tips:

- To reduce repetitive samples somewhat, pick coefficients between 0.1 and 1.

- To strongly suppress repetition, increase the coefficients up to 2, but this can noticeably degrade the quality of samples.

- Negative values increase the likelihood of repetition.

Summary

- top-k is static, always yielding k tokens, while top-p varies based on output distribution.

- top-k and top-p control token diversity and local coherence; temperature influences overall fluency, creativity, and global coherence.

- top-k limits the token pipeline at the start, potentially improving coherence by focusing on most probable tokens.

- Indicator variables based on generated tokens can control repetition:

  - alpha_presence shifts logits left for tokens that have already appeared

  - alpha_frequency applies a harder penalty proportional to token frequency

- A brainstorming tool might do well with a high temperature, high top-k and a high top-p, while a factual question-answering system might perform better with low top-k and low temperature.

- These parameters balance coherence, repetition, and creativity in decoding steps.

References

[1] A. Holtzman, J. Buys, L. Du, M. Forbes, and Y. Choi, “The Curious Case of Neural Text Degeneration.” arXiv, Feb. 14, 2020. Available: http://arxiv.org/abs/1904.09751. [Accessed: Jul. 07, 2024]

[2] L. Salamone, “What is Temperature in NLP?🐭,” Luke Salamone’s Blog, Apr. 02, 2021. Available: https://lukesalamone.github.io/posts/what-is-temperature/. [Accessed: Jul. 22, 2024]

[3] N. S. Keskar, B. McCann, L. R. Varshney, C. Xiong, and R. Socher, “CTRL: A Conditional Transformer Language Model for Controllable Generation.” arXiv, Sep. 20, 2019. doi: 10.48550/arXiv.1909.05858. Available: http://arxiv.org/abs/1909.05858. [Accessed: Jul. 08, 2024]